Forging Clarity in the Age of AI

“Je n’ai fait celle-ci plus longue que parce que je n’ai pas eu le loisir de la faire plus courte.” -- Blaise Pascal

I made this letter longer because I didn’t have time to make it shorter.

Clarity and conciseness! Of thought, strategy, design, code. It's a thing of beauty, but also of practicality.

Concise clarity comes at the end, forged through initial naivety, confusion, and contradiction. You iterate through numerous tradeoffs and decisions. You know you’ve arrived not through a formula, but through intuition and taste.

Good marriages and relationships aren’t built by reading the manual. Good health isn’t attained by watching exercise videos. Concise clarity isn’t obtained by outsourcing the process to AI. Rather, it’s forged empirically. Compounding, first slowly, then quickly. Starting fast doesn’t mean you’ll finish ahead.

You become a good writer with pen or keyboard in hand. You become a good programmer by striking keys. You become good at business by running one. You practice, you fail, you learn. You eliminate noise until you’re left with the necessary. The result is beauty you feel but can’t quite describe. Not beauty in the aesthetic sense, more like rightness. When a thing is so well-fitted to its purpose that it feels inevitable, like it couldn’t be any other way. Christopher Alexander called it “the quality without a name.” That’s clarity.

LLMs can help. They speed up research, synthesize information, and surface ideas. But at their core, they're statistical next-word generators, best used as copilots. That doesn't minimize their transformative potential; rather, it sharpens our understanding of how best to incorporate them into our lives.

Here are a few things I've learned:

Don't let the LLM write for you. Writing isn't about the outcome; it's about the thinking process you go through to reach it. You can't separate writing from thinking. To write is to think. Use the LLM to surface information as needed, then do the work of integrating it yourself. Run your draft through it for editing and final tweaks, but only once you've reached clarity and "rightness" on your own.

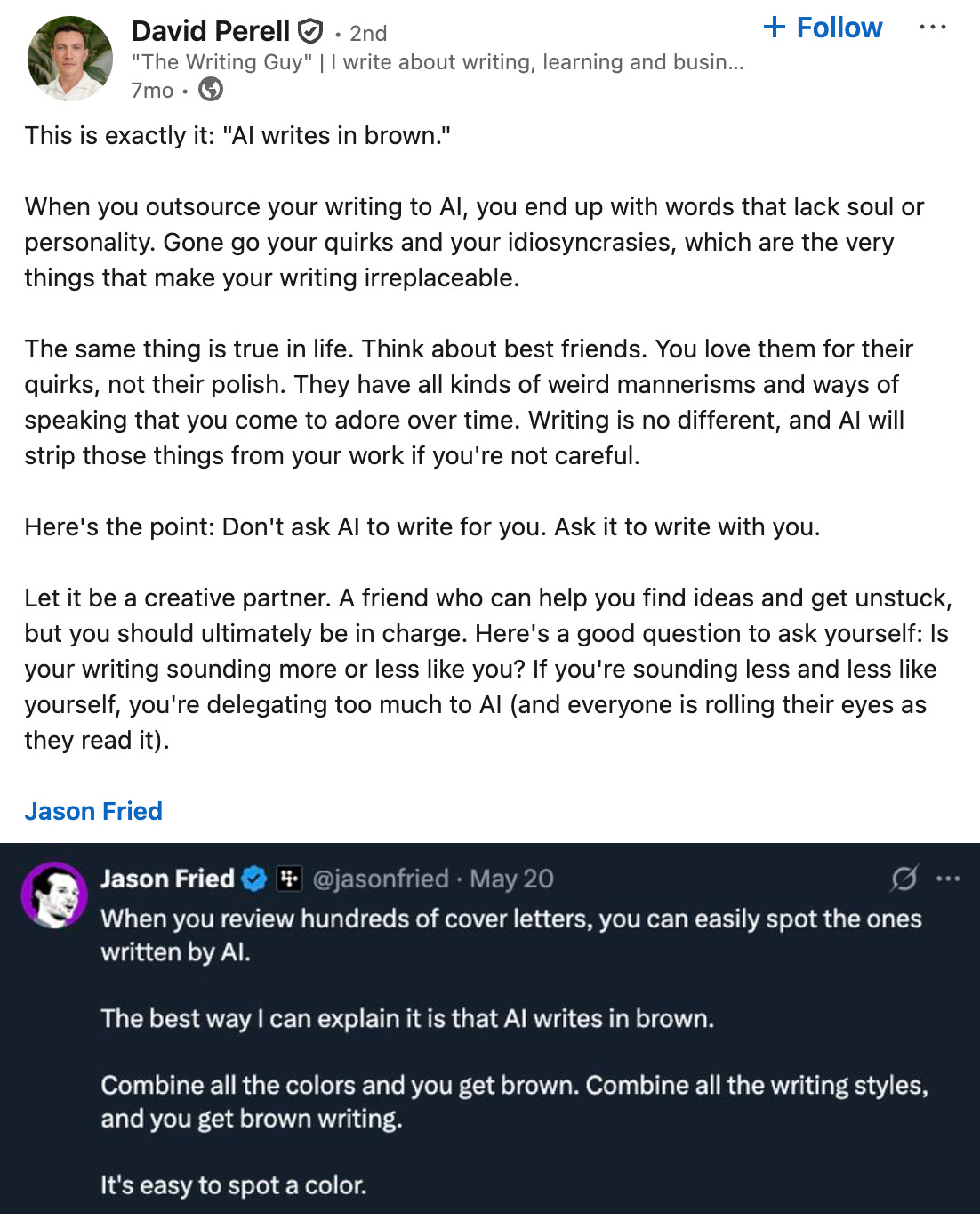

Human connection thrives on quirks, not polish. But LLMs write in "brown." These days, I can easily spot when someone has outsourced their writing and thinking to AI wholesale.

I'm still refining how best to use LLMs to enhance my writing, coding, and problem-solving. Right now, I lean toward keeping the process more manual. I start with a blank page. I don't want to be influenced right away; I want to clear my mind first. I write, then ask for editing advice, then read through it and decide what to incorporate. The editing advice has been hit or miss, so I don't see myself moving to "Here's what I wrote, can you rewrite it?" any time soon.

When coding, I do the same. LLMs are great at bootstrapping a new repo, but once the repo exists, I stop letting them control the keyboard and go back to asking pointed questions and deciding what to incorporate. The results have been mixed. Sometimes it works; other times we iterate through many variations of my prompt without ever reaching a clear, satisfying result. But an LLM sure is an amazing context-aware autocomplete for code blocks and sometimes whole functions.

I find the synthesis abilities of LLMs superb. Whether they're synthesizing research or explaining a codebase I didn't write, they do a great job of saving time. But I also wonder whether skipping the effort of grinding through it myself hurts longer-term learning and results. Remember, growth comes slow, then fast :-)

I like the "AI writes in brown" analogy. The brownness comes from the stochastic generation process at the core of LLMs: they don't actually "write."

I remember how amazed I was when I first saw my father's needle (!) printer in his office. Man, did I waste a lot of ink and paper printing pictures. But of course, the printer doesn't draw; it simulates drawing with individual dots. That process has improved a lot over time, but it still yields much lower fidelity than actually drawing a picture: zoom in 100x and you see the difference.

That loss of fidelity is particularly acute with genAI "writing". If what you are trying to convey is truly important, then why would you accept that loss in fidelity?

As a reader, whether I'm reading a LinkedIn post or a GitHub issue, I pass over anything that's simply AI-generated. Why? It puts all the work on me, the reader, to make sense of what's being shared and to test it for accuracy and completeness. In very few cases is that an acceptable burden to put on the reader.